We value your feedback and insights. If you have suggestions, ideas, or questions, feel free to comment on existing issues. Engaging in discussions helps shape Monty's direction and ensures that the community's needs and concerns are considered.

Please consider the following when commenting.

Remember the people involved in the discussion. Be welcoming. If there is a conflict, or if reaching an agreement is difficult, an audio or video call can help. Always be polite, and remember that everyone is trying to help in their own way.

When commenting on issues, focus first on understanding what is being communicated. What is the other person's context?

We encourage everyone to try to reproduce reported bugs. If you reproduce one, please comment on the Issue with your reproduction to help verify the problem.

If you begin working on a Feature Request, it is helpful to let people know. While Feature Requests are not assigned to specific people, in some cases, it may be beneficial to discuss them with others who are also working on them.

📘 This page is about contributing Tutorials

See here for current tutorials.

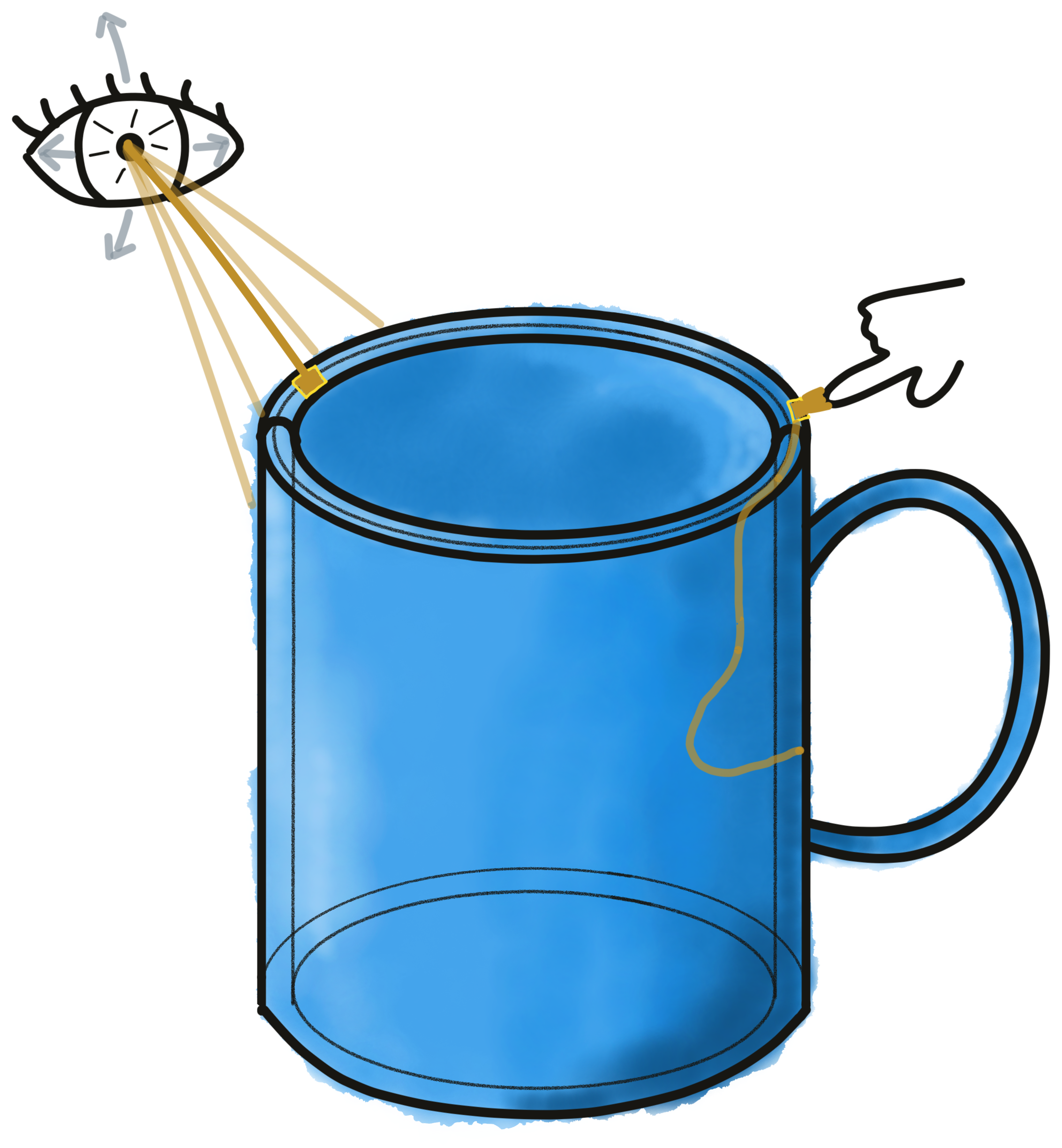

Tutorials are a great way for people to get hands-on experience with our code and to learn about our approach. They should contain a mix of working code, text explaining the code, and images visualizing the concepts explained and any results from executing the code. They should be an easy-to-follow resource for people who are new to this project. They should not require someone to have read the rest of the documentation, but they can link to other documentation pages for further reading.

We deeply appreciate people who take the time to write tutorials for others. If you have come to this project recently, you may be the best person to explain the code to other newcomers, as you are approaching it with a fresh mind and remember the concepts you struggled with.

If you would like to contribute a tutorial on a specific topic, the best place to start is to look at our existing tutorials to get an idea of how to structure it. Then, create a new .md file inside the tutorials folder and write your tutorial. Please make sure to include working code so that people can copy it into a Python file and follow along. Also, include visuals wherever possible. See our contributing documentation guide for more details. Finally, don't forget to add your tutorial and a short description to the list of tutorials here.

Welcome, and thank you for your interest in contributing to Monty!

We appreciate all of your contributions. Below, you will find a list of ways to get involved and help create AI based on principles of the neocortex.

There are many ways in which you can contribute to the code. For some suggestions, see the Contributing Code Guide.

Monty integrates code changes using GitHub Pull Requests. For details on how Monty uses Pull Requests, please consult the Contributing Pull Requests guide.

This project has many aspects, and we try to document them all here in sufficient detail. However, you are the ultimate judge of whether this documentation is sufficient and what kind of information is missing. We try to update the documentation based on questions asked on our communication channels, but we are always happy to get more help with this.

Also, if you are contributing code, please include a corresponding documentation update in your PR; this helps everyone else.

Please see our guide on contributing documentation.

As a Monty user or contributor, you can help others become familiar with different aspects of Monty. We are always looking for new approaches to ease the introduction to Monty and its concepts. See the Contributing Tutorials guide on how you could add easy-to-follow tutorials for other users. You can also become active on our forum and help answer questions from others.

You can find the researchers, developers, and users of Monty on the Thousand Brains Forum. Please join us there for active discussion on all things Monty and Thousand Brains.

The vision of this project is to implement a generally intelligent system that can solve a wide variety of sensorimotor tasks (at a minimum, any task the neocortex can solve/humans can perform with ease). To evaluate this, we are continually looking for test beds in which we can assess the system's capabilities. If you have an idea of how to test an important capability or you have an existing benchmark on which you want to compare our algorithm, please consider contributing these.

If you encounter any unexpected behavior, please consider creating a new Bug Report issue if it has not yet been reported.

We value your feedback and insights. If you have suggestions, ideas, or questions, feel free to comment on existing issues. Engaging in discussions helps shape Monty's direction and ensures that the community's needs and concerns are considered. For further details, please refer to the Commenting on Issues Guide.

Reviewing Pull Requests is a great way to contribute to the Monty project and get familiar with the code. By participating in the review process, you help ensure the quality, security, and functionality of the Monty codebase. For details on conducting a review, please refer to the Pull Request Review Guide.

We look forward to your ideas. For smaller changes, consider creating a new Feature Request issue and submitting a Pull Request (see below). For substantial changes, we have a Request For Comments (RFC) process designed to let Core Maintainers understand and comment on your idea before implementation starts. If you are unsure, go ahead and create a new Feature Request issue; if it can benefit from an RFC, Maintainers will comment and let you know.

We love seeing how you use Monty. If you created something interesting with it, whether a project, research paper, application, or blog post, share it with us.

- Showcase Your Projects: Submit your projects to be featured on our Showcase Page. This is a great way to highlight your work and inspire others.

- Write a Blog Post: Share your experience and insights by writing a blog post. Please share your post with the community on our Discourse server.

- Publish a Paper: If you use our Monty implementation or ideas from the Thousand Brains Theory in your next publication, we would like to feature you on our TBP-based papers list and increase the visibility of your research.

- Present at Community Events: We host regular webinars and community meetups. If you are interested in presenting your project or research, please get in touch with us at [email protected].

- Social Media: Share your creations on social media using the hashtag #1000brainsproject. Follow us on X, Bluesky, or LinkedIn, and subscribe to our YouTube channel or our email list.

Thank you for being a member of our community. By using Monty, you are already promoting Monty and the Thousand Brains Project. If you like our project, we are happy to see you mention us in your social media posts or privately to friends and colleagues.

If you want to discuss further opportunities, such as mentioning us in a blog post or newspaper article or recording an interview with us, don't hesitate to contact [email protected].

If you are a research lab, government institution, or company and you think a closer collaboration with our team could be mutually beneficial, please reach out to us at [email protected].

📘 This page is about contributing Documentation

For current Documentation see Getting Started

Our documentation is stored as Markdown files in the Monty repo under the /docs folder. This documentation is synchronized to readme.com for viewing whenever a change is made. The order of sections, documents, and sub-documents is maintained by a hierarchy file called /docs/hierarchy.md, a fairly straightforward Markdown document that tells readme how to order the categories, documents, and sub-documents.

📘 Edits to the documentation need to be submitted in the form of PRs to the Monty repository.

We use Vale to lint our documentation. The linting process checks for spelling errors and ensures that headings follow the APA title case style.

The linting rules are defined in the /.vale/ directory.

You can add new words to the dictionary by adding them to .vale/styles/config/vocabularies/TBP/accept.txt. For more information, see Vale's documentation.

- Install Vale: Download Vale from its installation page.

- Run Vale: Use the following command in your terminal to run Vale:

  ```shell
  vale .
  ```

  Example output:

  ```text
  ➜ tbp.monty git:(main) vale .
  ✔ 0 errors, 0 warnings and 0 suggestions in 141 files.
  ```

Links to other documents should use the standard Markdown link syntax and should be relative to the document's location.

A relative link in the same directory:

```markdown
[Link Text](placeholder-example-doc.md)
```

A relative link with a deep link to a heading:

```markdown
[Link Text](../contributing/placeholder-example-doc.md#relative-links)
```

These links will work even if you're on a designated version of the documentation.
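To make "relative to the document's location" concrete, here is a minimal sketch that resolves a link target against the directory of the linking document using Python's standard `posixpath` module. The linking document path and the `resolve_link` helper are hypothetical; this only approximates how a renderer resolves such links and is not part of the sync tool.

```python
import posixpath


def resolve_link(doc_path: str, link: str) -> str:
    """Resolve a relative Markdown link against the linking document's directory.

    Any "#heading" fragment is carried over unchanged.
    """
    target, sep, fragment = link.partition("#")
    resolved = posixpath.normpath(posixpath.join(posixpath.dirname(doc_path), target))
    return resolved + sep + fragment


# Hypothetical linking document inside docs/how-to-use-monty/.
doc = "docs/how-to-use-monty/some-doc.md"
print(resolve_link(doc, "placeholder-example-doc.md"))
# docs/how-to-use-monty/placeholder-example-doc.md
print(resolve_link(doc, "../contributing/placeholder-example-doc.md#relative-links"))
# docs/contributing/placeholder-example-doc.md#relative-links
```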

This is the simplest flow. To modify a document, simply edit the Markdown file in your fork of the Monty repository and commit the changes, following the normal Pull Requests process.

To create a new document, create the new file in the category directory, then add a corresponding line in the /docs/hierarchy.md file.

```markdown
# my-category: My Category

- [my-new-doc](/my-category/new-placeholder-example-doc.md)
- [some-existing-doc](/my-category/placeholder-example-doc.md)
```

Then, create your Markdown document /docs/my-category/new-placeholder-example-doc.md and add the appropriate Frontmatter.

```markdown
---
title: 'New Placeholder Example Doc'
---

# My first heading
```

🚧 Quotes

Please put the title in single quotes and, if applicable, escape any single quotes using two single quotes in a row. Example:

```yaml
title: 'My New Doc''s'
```

🚧 Your title must match the URL-safe slug

If your title is `My New Doc's`, then your file name should be `my-new-docs.md`.
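The two title rules above can be sketched in a few lines. `yaml_title` applies the doubled single-quote escaping, and `slugify` is a hypothetical approximation of a URL-safe slug inferred from the single example given; readme.com's actual slug rules may differ, for example for non-ASCII characters or other punctuation.

```python
import re


def yaml_title(title: str) -> str:
    """Return a frontmatter title line, single-quoted, with ' escaped as ''."""
    return "title: '" + title.replace("'", "''") + "'"


def slugify(title: str) -> str:
    """Approximate a URL-safe slug (assumption): lowercase, drop apostrophes,
    and collapse every other run of non-alphanumerics into a single hyphen."""
    slug = title.lower().replace("'", "")
    slug = re.sub(r"[^a-z0-9]+", "-", slug)
    return slug.strip("-")


title = "My New Doc's"
print(yaml_title(title))       # title: 'My New Doc''s'
print(slugify(title) + ".md")  # my-new-docs.md
```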

Continue with the Pull Requests process.

Documents that are nested under other documents require that you create a folder with the same name as the parent document but without the .md extension. Then, place any sub-documents in that folder. For example, if you were creating a document called new-placeholder-example-doc.md beneath the document category-one/some-existing-doc.md, you would create a folder called category-one/some-existing-doc and place the new document in that folder.

And then update the hierarchy.md file:

```markdown
# category-one: Category One

- [some-existing-doc](category-one/some-existing-doc.md)
- [new-doc](category-one/some-existing-doc/new-placeholder-example-doc.md)

# category-two: Category Two

...
```

Continue with the Pull Requests process.
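The folder rule for sub-documents amounts to dropping the parent document's .md extension and using the result as a folder. A minimal sketch with `pathlib`; the helper name `child_doc_path` is hypothetical.

```python
from pathlib import PurePosixPath


def child_doc_path(parent_doc: str, child_file: str) -> str:
    """Place a sub-document in a folder named after its parent document,
    i.e. the parent's path with the .md extension removed."""
    return str(PurePosixPath(parent_doc).with_suffix("") / child_file)


print(child_doc_path("category-one/some-existing-doc.md", "new-placeholder-example-doc.md"))
# category-one/some-existing-doc/new-placeholder-example-doc.md
```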

If the move is within a category or sub-pages within a page, you can simply edit the hierarchy.md file and update the locations by moving the lines around.

If you are changing the parent path of a document (i.e., sub-page -> page, page -> sub-page, page/sub-page -> new category, or sub-page -> new page), then along with updating the hierarchy.md file, you must also update the folder structure so that the document is correctly located. If they do not match, the sync tool will fail with a pre-check error saying there is a mismatch between the hierarchy file and the location on disk.
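The kind of consistency that pre-check enforces can be illustrated with a small, hypothetical script that lists hierarchy entries whose file is missing on disk. It assumes entries look like the `- [slug](path)` lines in the examples on this page, with an optional leading /. It is not the sync tool's actual implementation; use the sync tool's `check` command for the real check.

```python
import re
import tempfile
from pathlib import Path

# Matches hierarchy entries such as "- [new-doc](category-one/new-doc.md)".
ENTRY = re.compile(r"^\s*-\s*\[[^\]]+\]\(([^)]+)\)")


def missing_docs(hierarchy_text: str, docs_root: Path) -> list[str]:
    """Return hierarchy entry paths whose Markdown file does not exist under docs_root."""
    missing = []
    for line in hierarchy_text.splitlines():
        match = ENTRY.match(line)
        if match:
            rel_path = match.group(1).lstrip("/")  # accept the "/category/doc.md" form
            if not (docs_root / rel_path).is_file():
                missing.append(rel_path)
    return missing


# Example: the parent doc exists, but the new sub-document was never created.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "category-one" / "some-existing-doc").mkdir(parents=True)
    (root / "category-one" / "some-existing-doc.md").write_text("")
    hierarchy = (
        "# category-one: Category One\n"
        "- [some-existing-doc](category-one/some-existing-doc.md)\n"
        "- [new-doc](category-one/some-existing-doc/new-placeholder-example-doc.md)\n"
    )
    print(missing_docs(hierarchy, root))
    # ['category-one/some-existing-doc/new-placeholder-example-doc.md']
```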

Continue with the Pull Requests process.

🚧 You cannot reorder categories as the readme.com API does not support this.

Changes to the category order should be done in the readme.com UI and reflected in the hierarchy.md file.

If a document is well established (it has been around for more than 6 months), people may be using permalinks to it. Therefore, rather than deleting or renaming it, it is a good idea to turn it into a redirect: set the document to hidden and add a relocation link pointing to a relevant area or the new document location. Hidden files are reachable from the URL, just not shown in the navigation.

```markdown
---
title: 'Badly Named Doc'
hidden: true
---

> ⚠️ this document has moved to <insert link>
```

Continue with the usual Pull Requests process.

To create a new category, simply create a new folder inside the /docs folder and add a reference to it in the hierarchy.md file. Categories in the hierarchy file need a slug and title separated by a colon.

```markdown
# category-one: Category One

# category-two: Category Two
```

Our documentation sync tool has a flag to check internal links, image references, and hierarchy file references. This is a good way to ensure that all links are working correctly before submitting a PR.

To check the links, activate the conda environment, and then run the following command:

```shell
python -m tools.github_readme_sync.cli check docs
```

Note

See the readme sync tool documentation for more details on how to use it and how to install the additional dependencies for it.

See the Style Guide images section for details about creating and referencing images correctly.

👍 You have access to VS Code snippets

When you check out the repository, you have access to Markdown snippets for tables, code blocks, warnings, and more. While your cursor is in a Markdown file, press CMD + Shift + P (Ctrl + Shift + P on Windows and Linux) and select Insert Snippet to choose a documentation snippet.

See the documentation Style Guide.

The Monty documentation uses only the first two parts of semantic versioning (semver), MAJOR.MINOR, as there is nothing to document for patch changes. You can read about semver at https://semver.org/.
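As an illustration of dropping the patch component, here is a short sketch; the function name `docs_version` and the version strings are hypothetical.

```python
def docs_version(version: str) -> str:
    """Keep only MAJOR.MINOR from a MAJOR.MINOR.PATCH version string."""
    major, minor, _patch = version.split(".", 2)
    return f"{major}.{minor}"


# Every patch release of 1.4 maps to the same documentation version.
print(docs_version("1.4.0"))  # 1.4
print(docs_version("1.4.7"))  # 1.4
```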

Monty uses GitHub Pull Requests to integrate code changes.

Before we can accept your contribution, you must sign the Contributor License Agreement (CLA). You can view and sign the CLA now or wait until you submit your Pull Request.

See the Contributor License Agreement page for more on the CLA.

Before submitting a Pull Request, you should set up your development environment to work with Monty. See the development Getting Started guide for more information.

- Identify an issue to work on.

- Ensure your fork has the latest upstream `main` branch changes (if you don't have a fork of the Monty repository or aren't sure, see the development Getting Started guide):

  ```shell
  git checkout main
  git pull --rebase upstream main
  ```

- Create a new branch on your fork to work on the issue:

  ```shell
  git checkout -b <my_branch_name>
  ```

- Implement your changes. Keep in mind any tests or benchmarks that you may need to add or update.

- If you've added, deleted, or modified code, test your changes locally via:

  ```shell
  pytest
  ```

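- Push your changes to your branch on your fork. On the first push of a new branch, `-u` sets its upstream so that later pushes can use plain `git push` (this assumes `origin` is your fork):

  ```shell
  git push -u origin <my_branch_name>
  ```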

- Create a new GitHub Pull Request from your fork to the official Monty repository.

- Respond to and address any comments on your Pull Request. See Pull Request Flow for what to expect.

- Once your Pull Request is approved, it will be merged by one of the Maintainers. Thank you for contributing! 🥳🎉🎊

- It is recommended to add unit tests for any new feature you implement. This makes sure that your feature continues to function as intended when other people (or you) make future changes to the code. To get a detailed coverage report, use:

  ```shell
  pytest --cov --cov-report html
  ```

- Run `pytest`, `ruff check`, and `ruff format` to make sure your changes don't break any existing code and adhere to our style requirements. If your code doesn't pass these checks, it cannot be merged.

- Make sure that your code is properly documented. Please refer to our Style Guide for instructions on how to format your comments.

- If applicable, please also update or add to the documentation on readme.com. For instructions on how to do this, see our guide on contributing documentation.

- Use callbacks for logging, and don’t put control logic into logging functions.

- Note that the random seed in Monty is handled using a generator object that is passed where needed, i.e., by initializing the random number generator with:

  ```python
  rng = np.random.RandomState(experiment_args["seed"])
  ```

  This `rng` is then passed to the various classes and can be accessed in the sensor modules, learning modules, and motor system with `self.rng`. Thus, to use a random NumPy method, call it with, e.g., `self.rng.uniform()` rather than `np.random.uniform()`.
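A minimal sketch of the seeding pattern above, combined with a pytest-style unit test. `NoisySensor` and `make_rng` are hypothetical stand-ins, not Monty classes; the point is that every random draw goes through the `rng` that was passed in, so two objects built from the same seed produce identical results.

```python
import numpy as np


class NoisySensor:
    """Hypothetical stand-in for a module that receives the shared rng."""

    def __init__(self, rng: np.random.RandomState):
        self.rng = rng

    def add_noise(self, value: float, scale: float = 0.1) -> float:
        # Draw from self.rng, never from the global np.random state.
        return value + self.rng.uniform(-scale, scale)


def make_rng(experiment_args: dict) -> np.random.RandomState:
    # Same seeding pattern as above: one generator per experiment.
    return np.random.RandomState(experiment_args["seed"])


def test_same_seed_gives_same_noise():
    a = NoisySensor(make_rng({"seed": 42}))
    b = NoisySensor(make_rng({"seed": 42}))
    assert a.add_noise(1.0) == b.add_noise(1.0)
```

Saved in a file whose name starts with `test_`, the test function would be collected and run by `pytest`.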

Note

This page is about contributing RFCs

For existing RFCs, see the rfcs folder.

For RFCs in progress, see issues with the `rfc:proposal` label.

The Request for Comments (RFC) process is intended to provide a consistent and controlled path for substantial changes to Monty so that all stakeholders can be confident about the project's direction. It should also help avoid situations where a contributor spends a lot of time implementing a feature or change that eventually does not get merged.

Many changes, including bug fixes, smaller changes, and documentation improvements, can be implemented and reviewed via Pull Requests.

Substantial changes should undergo a design process and create consensus among the Monty community and Maintainers.

If you are unsure whether your intended change is substantial, create a new Feature Request issue. Maintainers will comment and let you know if it can benefit from an RFC.

The process here is intended to be as lightweight as reasonable for the present circumstances and not impose more structure than necessary. If you feel otherwise, please consider creating an RFC to update this process.

You need to follow this process if you intend to make substantial changes to Monty or its associated open-source framework and workflows. What constitutes a substantial change is evolving based on community norms and varies depending on what part of the ecosystem you are proposing to change, but may include the following:

- Any fundamental iteration of the Monty architecture

- Removing Monty features

- Any changes to the Cortical Message Protocol (CMP)

- Breaking changes to the API

- Diverging from Monty's brain-inspired philosophy and principles

Some changes do not require an RFC:

- Rephrasing, reorganizing, refactoring, or otherwise "changing shape does not change meaning."

- Additions that strictly improve objective, numerical quality criteria (warning removal, speedup, better platform coverage, more parallelism, etc.)

If you submit a pull request for a substantial change without going through the RFC process, it may be closed with a request to submit an RFC first.

A hastily proposed RFC can hurt its chances of acceptance. Low-quality proposals, proposals for previously rejected features, or those that don't fit into the near-term roadmap may be quickly rejected, which can demotivate the unprepared contributor. Laying some groundwork ahead of the RFC can make the process smoother. Please have a look at some of our past RFCs in the rfcs folder to see their scope.

Although there is no single way to prepare for submitting an RFC, it is generally a good idea to seek feedback from other project developers beforehand to ascertain that the RFC may be desirable; having a consistent impact on the project requires concerted effort toward consensus-building.

The most common preparation for writing and submitting an RFC is discussing the idea on the Monty Researcher/Developer Forum or submitting an issue or feature request.

To contribute a substantial change to Monty, you must first merge the RFC into the Monty repository as a markdown file. At that point, the RFC is active and may be implemented with the goal of eventual inclusion into Monty.

- Fork the Monty repository (see the development Getting Started guide)

- Copy one of the templates. The templates are intended to get you started from something other than a blank page. You can use the minimal template, `rfcs/0000_minimal_template.md`, or, for more detailed proposals, the comprehensive template, `rfcs/0000_comprehensive_template.md`. Whichever template you use, copy it to `rfcs/0000_my_proposal.md` (where "my_proposal" is a short but descriptive title). Don't assign an RFC number yet. The file will be renamed accordingly if the RFC is accepted.

- Author the RFC. Consider your proposal. Please feel free to include images, diagrams, or examples if they help explain your proposal. For an example of a small accepted RFC, see `rfcs/0005_easier_rfcs.md`. For an example of a comprehensive accepted RFC, see `rfcs/0004_action_object.md`.

- Submit an RFC Pull Request. As a pull request, the RFC will receive design feedback from the broader community, and you should be prepared to revise it in response. Title your pull request starting with `RFC` and the title of your proposal. For example, `RFC My Proposal`.

- Each RFC Pull Request will be triaged, given an `rfc:proposal` label, and assigned to a Maintainer who will serve as your primary point of contact for the RFC. If you are a Maintainer and you wrote the RFC, you are the point of contact, and you should assign the RFC Pull Request to yourself.

- Build consensus and integrate feedback. RFCs with broad support are much more likely to make progress than those that don't receive any comments. Contact the RFC Pull Request assignee for help identifying stakeholders and obstacles.

- In due course, one of the Maintainers will propose a "motion for final comment period (FCP)" along with the disposition for the RFC (merge or close). If you are a Maintainer and the author of the RFC, you can propose the FCP yourself.

- This can happen quickly, or it can take a while.

- This step is taken when enough of the tradeoffs have been discussed so that the Maintainers can decide. This does not require consensus amongst all participants in the RFC thread (which is usually impossible). However, the argument supporting the disposition of the RFC needs to have already been clearly articulated, and there should not be a strong consensus against that position. Maintainers use their best judgment in taking this step, and the FCP itself ensures there is ample time and notification for stakeholders to push back if it is made prematurely.

- For RFCs with lengthy discussions, the motion for FCP is usually preceded by a summary comment that attempts to outline the current state of the discussion and major tradeoffs/points of disagreement.

- The FCP lasts until all Maintainers approve or abstain from the disposition. This way, all stakeholders can lodge any final objections before reaching a decision.

- Once the FCP elapses, the RFC is either merged or closed. If substantial new arguments or ideas are raised, the FCP is canceled, and the RFC goes back into development mode. The assigned Maintainer is the one responsible for merging or closing.

[!NOTE] Maintainers

- Prior to merging the Pull Request, make one last commit:
  - Assign the next available sequential number to the RFC.
  - Rename the Pull Request to include the RFC number, e.g., `RFC 4 Action Object`.
  - Rename `rfcs/0000_my_proposal.md` accordingly.
  - Update the asset folder, like `rfcs/0000_my_proposal/`, and any links to assets in that folder, if present.
  - Provide the link to the RFC Pull Request in the `RFC PR` field at the top of the RFC text.

- Merge the Pull Request. The commit message should consist of the `rfc:` prefix, RFC number, title, and pull request number, e.g., `rfc: RFC 3 No Three Day Wait (#366)`.

- Create an Issue in the project that tracks implementation of the merged RFC, to fulfill the "Every accepted RFC has an associated issue, tracking its implementation in the Monty repository" requirement. The Issue title should be the RFC number, title, and the word "Implementation", e.g., `RFC 4 Action Object Implementation`.
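The naming conventions in the Maintainer checklist can be summarized in a hypothetical helper. It assumes the file stem is the RFC title in snake_case, as in `0004_action_object.md`; in practice, the stem is whatever short descriptive title the author chose. The RFC number, title, and PR number in the example call are placeholders.

```python
import re


def rfc_names(number: int, title: str, pr_number: int) -> dict[str, str]:
    """Derive the names a Maintainer sets when merging an RFC (hypothetical helper)."""
    snake = re.sub(r"[^a-z0-9]+", "_", title.lower()).strip("_")
    stem = f"rfcs/{number:04d}_{snake}"  # assumption: stem = snake_case title
    return {
        "pr_title": f"RFC {number} {title}",
        "file": f"{stem}.md",
        "asset_folder": f"{stem}/",
        "commit_message": f"rfc: RFC {number} {title} (#{pr_number})",
        "issue_title": f"RFC {number} {title} Implementation",
    }


for key, value in rfc_names(7, "My Proposal", 999).items():
    print(f"{key}: {value}")
```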


Once an RFC becomes active, contributors may implement it and submit the implementation as a pull request to the Monty repository. Being active does not guarantee the feature will ultimately be merged. It does mean that, in principle, all the major stakeholders have agreed to the feature and are amenable to merging it.

Furthermore, the fact that a given RFC has been accepted and is active implies nothing about what priority is assigned to its implementation, nor does it imply anything about whether a Maintainer has been assigned the task of implementing it. While it is not necessary that the author of the RFC also write the implementation, it is by far the most effective way to see an RFC through to completion: Authors should not expect that others will take on responsibility for implementing their accepted RFC.

Modifications to active RFCs can be done in follow-up Pull Requests. We strive to write each RFC in a manner that will reflect the final design of the feature. Still, the nature of the process means that we cannot expect every merged RFC to reflect what the end result will be at the time of the next major release; therefore, we try to keep each RFC document somewhat in sync with how the feature is actually being implemented, tracking such changes via follow-up pull requests to the document.

In general, **once accepted, RFCs should not be substantially changed**. Only very minor changes should be submitted as amendments. More substantial changes should be new RFCs, with a note added to the original RFC. Exactly what counts as a very minor change is up to the Maintainers to decide.

While the RFC pull request is open, Maintainers may schedule meetings with the author and relevant stakeholders to discuss the issues in greater detail. A summary from each meeting will be posted back to the RFC pull request.

Maintainers make final decisions about RFCs after the benefits and drawbacks are well understood. These decisions can be made at any time, and Maintainers will regularly make them. When a decision is made, the RFC pull request will be merged or closed. In either case, if the reasoning from the thread discussion is unclear, Maintainers will add a comment describing the rationale for the decision.

Some accepted RFCs represent vital features that need to be implemented right away. Other accepted RFCs can represent features that can wait until someone feels like doing the work. Every accepted RFC has an associated issue, tracking its implementation in the Monty repository.

The author of an RFC is not obligated to implement it. Anyone, including the author, is welcome to submit an implementation for review after the RFC has been accepted.

If you are interested in working on the implementation of an active RFC but cannot determine whether someone else is already working on it, feel free to ask (e.g., by leaving a comment on the associated issue).

See the Code Style Guide.

See the Typing Guide.

We use GitHub Actions to run our continuous integration workflows.

The workflow file name is the workflow name in snake_case, e.g., potato_stuff.yml.

The workflow name is a human-readable, descriptive, Capitalized Case name, e.g., `name: Docs`, `name: Monty`, `name: Tools`, `name: Potato Stuff`.

The job name, when used as a key in a `jobs:` dictionary, is human-readable snake_case ending with `_<workflow_name>`.
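The file-name and job-key rules above, together with the kebab-case `name:` rule described next, can be sketched as simple string transformations. The helpers are hypothetical and only illustrate the conventions; they are not part of the repository.

```python
import re


def snake(name: str) -> str:
    # "Potato Stuff" -> "potato_stuff"
    return re.sub(r"[^a-z0-9]+", "_", name.lower()).strip("_")


def workflow_file(workflow_name: str) -> str:
    # The workflow file name is the workflow name in snake_case.
    return snake(workflow_name) + ".yml"


def job_key(action: str, workflow_name: str) -> str:
    # Keys in the `jobs:` dictionary end with _<workflow_name>.
    return f"{snake(action)}_{snake(workflow_name)}"


def job_name(action: str, workflow_name: str) -> str:
    # The `name:` value is the kebab-case form, ending with -<workflow-name>.
    return job_key(action, workflow_name).replace("_", "-")


print(workflow_file("Potato Stuff"))            # potato_stuff.yml
print(job_key("check style", "Potato Stuff"))   # check_style_potato_stuff
print(job_name("check style", "Potato Stuff"))  # check-style-potato-stuff
```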

When used as a value for the `name:` property, the job name is human-readable kebab-case ending with `-<workflow-name>`, e.g.,

```yaml
jobs:
  check_docs:
    name: check-docs
```

```yaml
jobs:
  install_monty:
    name: install-monty
```

```yaml
jobs:
  test_tools:
    name: test-tools
```

```yaml
jobs:
  check_style_potato_stuff:
    name: check-style-potato-stuff
```

In general, we try to stick to native Markdown syntax. If you find yourself needing to use HTML, please chat with the team about your use case. It might be something that we build into the sync tool.

In a document, your first level of headings should be `#`, then `##`, and so on. This is slightly confusing, as `#` is usually reserved for the title, but on readme.com the h1 tag is used for the actual title of the document.

Use headings to split up long text blocks into manageable chunks.

Headings can be referenced in other documents using a hash link [Headings](doc:style-guide#headings). For example Style Guide - Headings

All headings should use capitalization following the APA convention. For detailed guidelines, see the APA heading style guide. You can test this with Vale by running `vale .` in the root of the repo.

Footnotes should be referenced in the document with a [1] notation that links to a `# Footnotes` section at the bottom.

For example:

```markdown
This needs a footnote[1](#footnote1)

# Footnotes

<a name="footnote1">1</a>: Footnote text
```

Images should be placed in /docs/figures in the repo.

Image file names use snake_case.ext.

Images should generally be in PNG or SVG format. Use JPG if the file is actually a photograph.

Upload high-quality images, as people can click on an image to see the larger version. You can add style attributes after the image path with #width=300px or similar.

For example, the following markdown creates the image below:

Warning

Caption text is only visible on readme.com

You can inline CSV data tables in your markdown documents. The following example shows how to create a table from a CSV file:

```markdown
!table[../../benchmarks/example-table-for-docs.csv]
```

The CSV contains the following data:

```csv
Year, Avg Global Temp. (°C), Pirates | align right | hover Pirate Count
1800, 14.3, 50 000
1850, 14.4, 15 000
1900, 14.6, 5 000
1950, 14.8, 2 000
2000, 15.0, 500
2020, 15.3, 200
```

Which produces the following table:

!table[style-guide.csv]

Note that the CSV header row uses a bar-separated syntax that lets you specify each column's alignment (left or right) and its hover text.
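To illustrate the header syntax, here is a hypothetical parser that splits a header cell into its title and optional `align` and `hover` settings. It is not how the sync tool parses tables; it assumes each setting is a keyword followed by a space and its value, as in the example header, and leaves a setting as `None` when it is not given.

```python
def parse_header_cell(cell: str) -> dict:
    """Split a cell like 'Pirates | align right | hover Pirate Count'
    into a title plus optional align/hover settings (assumed syntax)."""
    title, *options = [part.strip() for part in cell.split("|")]
    spec = {"title": title, "align": None, "hover": None}
    for option in options:
        key, _, value = option.partition(" ")
        if key in ("align", "hover"):
            spec[key] = value
    return spec


header = "Year, Avg Global Temp. (°C), Pirates | align right | hover Pirate Count"
specs = [parse_header_cell(cell) for cell in header.split(",")]
print(specs[2])  # {'title': 'Pirates', 'align': 'right', 'hover': 'Pirate Count'}
```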

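Readme supports four color-coded callouts. Each callout's Markdown syntax is shown first, followed by how it renders:

```markdown
> 👍 Something good
```

> 👍 Something good

```markdown
> 📘 Information
```

> 📘 Information

```markdown
> ⚠️ Warning
```

> ⚠️ Warning

```markdown
> ❗️ Alert
```

> ❗️ Alert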

Billions of people use the comma as the thousands separator, and billions use the period. As this documentation is expected to be widely used, we use a space as the separator, which is the internationally recommended convention.

For example, 1 million is written numerically as 1 000 000.
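If you generate numbers for the docs with Python, one way to produce space-separated thousands is to format with the `,` grouping option and then swap the commas for spaces. This is a sketch; the helper name `format_thousands` is hypothetical.

```python
def format_thousands(n: int) -> str:
    """Group digits in threes separated by a space, e.g. 1000000 -> '1 000 000'."""
    # The "," format option always groups with commas, independent of locale.
    return f"{n:,}".replace(",", " ")


print(format_thousands(1_000_000))  # 1 000 000
print(format_thousands(50_000))     # 50 000
```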

Note

For an architecture overview see the Architecture Overview page. Each of the major components in the architecture can be customized. For more information on how to customize different modules, please see our guide on Customizing Monty.

There are many ways in which you can contribute to the code. The list below is not comprehensive but might give you some ideas.

- Create a Custom Sensor Module: Sensor Modules are the interface between the real-world sensors and Monty. If you have a specific sensor that you would like Monty to support, consider contributing a Sensor Module for it. Also, if you have a good idea of how to extract useful features from a raw stream of data, this can be integrated as a new Sensor Module.

- Create a Custom Learning Module: Learning Modules are the heart of Monty. They are the repeating modeling units that can learn from a stream of sensorimotor data and use their internal models to recognize objects and suggest actions. What exactly happens inside a Learning Module is not prescribed by Monty. We have some suggestions, but you may have a lot of other ideas. As long as a Learning Module adheres to the Cortical Message Protocol and implements the abstract functions defined here, it can be used in Monty. It would be great to see many ideas for Learning Modules in this code base that we can test and compare. For information on how to implement a custom Learning Module, see our guide on Customizing Monty.

- Write a Custom Motor Policy: Monty is a sensorimotor system, which means that action selection and execution are important aspects. Model-based action policies are implemented within the Learning Module's Goal State Generator, but model-free ones, as well as the execution of the suggested actions from the Learning Modules, are implemented in the motor system. Our Thousand Brains Project team doesn't have much in-house robotics experience, so we value contributions from people who do.

- Add Support for More Environments: If you know of other environments that would be interesting to test Monty in (whether you designed them or they are common benchmark environments), you can add a custom `EnvironmentInterface` to support them.

- Improve the Code Infrastructure: Making the code easier to read and understand is a high priority for us, and we are grateful for your help. If you have ideas on how to refactor or document the code to improve this, consider contributing. We also appreciate help making our unit test suite more comprehensive. Please create an RFC before working on any major code refactor.

- Optimize the Code: We are always looking for ways to run our algorithms faster and more efficiently, and we appreciate your ideas on that. As with the previous point, PRs of this kind should not change the outputs of the system.

- Add to our Benchmarks: If you have ideas on how to test more capabilities of the system, we would appreciate it if you added to our benchmark experiments. This could mean evaluating different aspects in our current environments or adding completely new environments. Please note that, in order to allow us to frequently run all the benchmark experiments, we only add one experiment for each specific capability we test and try to keep the run times reasonable.

- Work on an open Issue: If you came to our project and want to contribute code but are unsure what to work on, the open Issues are a good place to start. See our guide on how to identify an issue to work on for more information.

Monty integrates code changes using GitHub Pull Requests. To start contributing code to Monty, please consult the Contributing Pull Requests guide.

We are excited about all contributors, and you may have a wide range of motivations for contributing. Here is a (non-exhaustive) list of what those reasons might be and the benefits you may get from contributing.

- You are tired of incremental progress on ANN benchmarks.

- You don't believe that LLMs are the path to true machine intelligence/understanding the brain.

- You want to do exciting research but don't have a big compute budget.

- You are looking for a wide open space to explore new ideas.

- You want to solve tasks where little training data is available.

- You want to solve sensorimotor tasks.

- You want to solve a task that requires quick, continuous learning and adaptation.

- You want to better understand the brain and principles underlying our intelligence.

- You want to work on the future of AI.

- You want to be part of a truly unique and special project.

Here is a list of concrete outputs you may get out of working on this project.

- Write a publication.

- Write your bachelor's or master's thesis on the Thousand Brains approach.

- Be part of an awesome community.

- Have your project showcased on our showcase page.

- Have your paper listed on our TBP-based papers page.

- Become a code contributor.

- Lastly, for those out there who love achievements, note that when an RFC you have made is merged and active, you can get a player icon of your choice on our project roadmap. Maybe we'll see you there soon? 🎯

Because we are putting this code under an MIT license and Numenta has put its related patents under a non-assert pledge, people can also build commercial applications on this framework. However, the current code is very much research code and not an out-of-the-box solution, so it will require significant engineering effort to tailor it to your application.

Note

For Maintainers

The philosophy behind triage is to check issues for validity and accept them into the various Maintainer workflows. Triage is not intended to engage in lengthy discussions on Issues or review Pull Requests. These are separate activities.

The typical triage outcomes are:

- Label and accept the Issue or Pull Request with `triaged`.

- Label, request more information, and mark the Issue with `needs discussion` and `triaged`.

- Reject by closing the Issue or Pull Request with `invalid`.

Note

Triage link (is issue, is open, is not triaged)

https://github.com/thousandbrainsproject/tbp.monty/issues?q=is:issue+is:open+-label:triaged

The desired cadence for Issue Triage is at least once per business day.

A Maintainer will check the Issue for validity.

Do not reproduce or fix bugs during triage.

A short descriptive title.

Ideally, the Issue creator followed the instructions in the Issue templates.

If not, and more information is needed, do not close the Issue. Instead, proceed with triage, request more information by commenting on the Issue, and add a needs discussion label to indicate that additional information is required. Remember to add the triaged label to indicate that the Issue was triaged after you applied any additional labels.

A valid Issue is on-topic, well-formatted, contains expected information, and does not violate the code of conduct.

Multiple labels can be assigned to an Issue.

- `bug`: Apply this label to bug reports.

- `documentation`: Apply this label if the Issue relates to documentation without affecting code.

- `enhancement`: Apply this label if the Issue relates to new functionality or changes in functional code.

- `infrastructure`: Apply this label if the Issue relates to infrastructure like GitHub, continuous integration, continuous deployment, publishing, etc.

- `invalid`: Apply this label if you are rejecting the Issue for validity.

- `needs discussion`: Apply this label if the Issue is missing information to determine what to do with it.

- `triaged`: At a minimum, apply this label if the Issue is valid and you have triaged it.

Do not assign priority or severity to Issues (see: RFC 2 PR and Issue Review).

Do not assign Maintainers to Issues. Issues remain unassigned so that anyone can work on them (see: RFC 2 PR and Issue Review).

If you feel that someone should be notified of the Issue, make a comment and mention them in the comment.

The desired cadence for Pull Request Triage is at least once per business day.

First, review any Pull Requests pending CLA.

Note

Pending CLA link (is pull request, is open, is not a draft, is not triaged, is pending CLA)

If the Pull Request CLA check is passing (you may need to rerun the CLA check), remove the cla label.

Note

Triage link (is pull request, is open, is not a draft, is not triaged, is not pending CLA)

First, check if the Pull Request CLA check is passing. If the check is not passing, add the cla label and move on to the next Pull Request. The skipped Pull Request will be triaged again after the CLA check is passing.

A Maintainer will check the Pull Request for validity.

There is no priority or severity applied to Pull Requests.

A valid Pull Request is on-topic, well-formatted, contains expected information, does not depend on an unmerged Pull Request, and does not violate the code of conduct.

A Draft Pull Request is ignored and not triaged.

A short descriptive title.

If the Pull Request claims to resolve an Issue, that Issue is linked and valid.

If the Pull Request is standalone, it clearly and concisely describes what is being proposed and changed.

If the Pull Request is related to a previous RFC process, the RFC document is referenced.

Pull Request branches from a recent main commit.

Pull Request does not depend on another unmerged Pull Request.

Note

Pull Requests that depend on unmerged Pull Requests add unnecessary complexity to the review process: Maintainers must track the status of multiple Pull Requests and re-review them if the dependent Pull Request is updated. It is much easier for the Pull Request author to track such dependencies and to submit the Pull Request once all the code it depends on has been merged to main.

It is OK if the commit history is messy. It will be "squashed" when merged.

Multiple labels can be assigned to a Pull Request. For example, an enhancement can come with documentation and continue along the Pull Request Flow after being triaged.

- `cla`: Apply this label if the Pull Request CLA check is failing.

- `documentation`: Apply this label if the Pull Request relates to documentation without affecting code.

- `enhancement`: Apply this label if the Pull Request implements new functionality or changes functional code.

- `infrastructure`: Apply this label if the Pull Request concerns infrastructure such as GitHub, continuous integration, continuous deployment, publishing, etc.

- `invalid`: Apply this label if you are rejecting the Pull Request for validity.

- `rfc:proposal`: Apply this label if the Pull Request is a Request For Comments (RFC).

- `triaged`: At a minimum, apply this label if the Pull Request is valid, you triaged it, and it should continue the Pull Request Flow.

Do not assign priority or severity to Pull Requests.

Use your judgement to assign the Pull Request to one or more Maintainers for review (this is the GitHub Assignees feature). If you are the author, you may not assign the Pull Request to yourself. Note that for RFCs, the meaning of the Assignees list is different (see Request For Comments (RFC)).

Use your judgement to request a review for the Pull Request from one or more people (this is the GitHub Reviewers feature).

If you feel that someone should be notified of the Pull Request, make a comment and mention them in the comment.

No, you do not need to be assigned to an issue to work on it. Multiple people can work on the same issue, and different people can submit multiple pull requests for the same issue. Ultimately, Maintainers decide during the Review which pull request will be merged. However, to avoid duplicated effort, we encourage you to comment on an issue when you start working on it so that others know. Also, if you decide to stop working on an issue, it is helpful to leave a comment so that someone else can pick up the issue and know what roadblocks you may have hit.

If you have nothing specific to work on or want to become more familiar with the project, you can start by finding existing issues in the Issue Tracker. Every triaged issue contains labels that provide additional information.

- Issues with a `good first issue` label should be appropriate for first-time committers and usually don't require broad changes across the code base or a deep understanding of the algorithms' intricacies.

If you decide to start work on one of the issues, please leave a comment to let Maintainers and others know.

Before creating a new issue, please check if a similar issue exists in the Issue Tracker. Perhaps someone already reported it, and someone might even be working on it.

If you do not see a similar issue reported, please create a New issue using one of the provided templates.

The future work documents have special Frontmatter metadata that is used to power the future-work widget.

Here is an example of what the Frontmatter fields look like:

```yaml
---
title: Future Work Widget
rfc: https://github.com/thousandbrainsproject/tbp.monty/blob/main/rfcs/0015_future_work.md
estimated-scope: medium
improved-metric: community-engagement
output-type: documentation
skills: github-actions, python, github-readme-sync-tool, S3, JS, HTML, CSS
contributor: codeallthethingz
status: in-progress
---
```

The following fields are validated against allow lists defined in snippet files to ensure consistency and quality.

Tags is a comma-separated list of keywords, useful for filtering the future work items. Edit `future-work-tags.md`.

!snippet[../../snippets/future-work-tags.md]

Skills is a comma-separated list of skills that will be needed to complete this work item. Edit `future-work-skills.md`.

!snippet[../../snippets/future-work-skills.md]

Very roughly, how big of a chunk of work is this? Edit future-work-estimated-scope.md.

!snippet[../../snippets/future-work-estimated-scope.md]

Is the work completed, or is it in progress? Edit future-work-status.md.

!snippet[../../snippets/future-work-status.md]

What type of improvement does this work provide? Edit future-work-improved-metric.md.

!snippet[../../snippets/future-work-improved-metric.md]

What type of output will this work produce? Edit future-work-output-type.md.

!snippet[../../snippets/future-work-output-type.md]
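A minimal sketch of that kind of allow-list check for a comma-separated field such as `skills` follows. It is not the project's `validator.py`: the `check_list_field` helper and the `ALLOWED_SKILLS` set are hypothetical, and the real allowed values are defined in the snippet files listed above.

```python
def check_list_field(raw_value: str, allowed: set[str]) -> list[str]:
    """Return the entries of a comma-separated field that are not in the allow list.

    Hypothetical helper for illustration only; not part of validator.py.
    """
    # Split on commas and drop surrounding whitespace and empty entries.
    entries = [entry.strip() for entry in raw_value.split(",") if entry.strip()]
    return [entry for entry in entries if entry not in allowed]


# Hypothetical allow list; the real values live in future-work-skills.md.
ALLOWED_SKILLS = {"github-actions", "python", "S3", "JS", "HTML", "CSS"}

unknown = check_list_field("python, S3, rust", ALLOWED_SKILLS)
print(unknown)  # ['rust']
```

Returning the offending entries, rather than a single boolean, makes it easy to report exactly which value needs to be fixed or added to the snippet file.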

Does this work item require an RFC? These values are processed in the `validator.py` code and can take one of the following forms:

- a link matching `https://github\.com/thousandbrainsproject/tbp\.monty/.*`

- `required`

- `optional`

- `not-required`

The contributor field should contain GitHub usernames, as these are converted to their avatars inside the table. These values are processed in the `validator.py` code and must match the form:

`[a-zA-Z0-9][a-zA-Z0-9-]{0,38}`
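The RFC and contributor rules can be expressed with Python's standard `re` module. This sketch reuses the patterns quoted above, but the helper names (`is_valid_rfc`, `is_valid_contributor`) and the use of `re.fullmatch` (the whole value must match) are assumptions, not a description of how `validator.py` actually applies them.

```python
import re

# Patterns copied from the text above; how validator.py applies them is assumed.
RFC_URL_PATTERN = re.compile(r"https://github\.com/thousandbrainsproject/tbp\.monty/.*")
RFC_KEYWORDS = {"required", "optional", "not-required"}
CONTRIBUTOR_PATTERN = re.compile(r"[a-zA-Z0-9][a-zA-Z0-9-]{0,38}")


def is_valid_rfc(value: str) -> bool:
    """Accept one of the keywords or a link into the tbp.monty repository."""
    value = value.strip()
    return value in RFC_KEYWORDS or RFC_URL_PATTERN.fullmatch(value) is not None


def is_valid_contributor(username: str) -> bool:
    """Check a single GitHub username against the allowed form."""
    return CONTRIBUTOR_PATTERN.fullmatch(username.strip()) is not None


rfc_link = (
    "https://github.com/thousandbrainsproject/tbp.monty/blob/main/rfcs/0015_future_work.md"
)
print(is_valid_rfc(rfc_link))  # True
print(is_valid_rfc("maybe"))  # False
print(is_valid_contributor("codeallthethingz"))  # True
print(is_valid_contributor("-not-a-user"))  # False: must start with a letter or digit
```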

We follow the PEP8 Python style guide.

Additional style guidelines are enforced by Ruff and configured in pyproject.toml.

To quickly check if your code is formatted correctly, run `ruff check` in the `tbp.monty` directory.

We use Ruff to check proper code formatting with a line length of 88.

A convenient way to ensure your code is formatted correctly is to use the Ruff formatter. If you use VSCode, you can install the Ruff VSCode extension and set it to format on save (modified lines only) so your code always looks nice and matches our style requirements.

We adopted the Google Style for docstrings. For more details, see the Google Python Style Guide - 3.8 Comments and Docstrings.
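As an illustration of the Google docstring layout (a one-line summary followed by `Args:`, `Returns:`, and `Raises:` sections), here is a small hypothetical helper; the function itself is not part of Monty.

```python
def clamp(value: float, low: float, high: float) -> float:
    """Clamp a value to the closed interval [low, high].

    Args:
        value: The number to clamp.
        low: Lower bound of the interval.
        high: Upper bound of the interval. Must be >= ``low``.

    Returns:
        ``value`` if it lies within the interval, otherwise the nearest bound.

    Raises:
        ValueError: If ``low`` is greater than ``high``.
    """
    if low > high:
        raise ValueError(f"low ({low}) must not exceed high ({high})")
    return max(low, min(value, high))
```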

After discovering that PyTorch-to-NumPy conversions (and the reverse) were a significant speed bottleneck in our algorithms, we decided to consistently use NumPy to represent the data in our system.

We still require the PyTorch library since we use it for certain things, such as multiprocessing. However, please use NumPy operations for any vector and matrix operations whenever possible. If you think you cannot work with NumPy and need to use Torch, consider opening an RFC first to increase the chances of your PR being merged.
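For example, a batch of 3D point locations can be rotated and compared using NumPy alone, with no round trip through PyTorch tensors. The helper names below (`rotate_points`, `distances_to`) are hypothetical and only meant to show the style of vectorized NumPy code we prefer.

```python
import numpy as np


def rotate_points(points: np.ndarray, rotation: np.ndarray) -> np.ndarray:
    """Apply a 3x3 rotation matrix to an (N, 3) array of points."""
    # With points stored as rows, p @ R.T equals R @ p for each point.
    return points @ rotation.T


def distances_to(points: np.ndarray, query: np.ndarray) -> np.ndarray:
    """Euclidean distance from each (N, 3) point to a single (3,) query point."""
    # Broadcasting subtracts the query from every row at once.
    return np.linalg.norm(points - query, axis=1)


# 90 degree rotation about the z-axis.
rot_z = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
points = np.array([[1.0, 0.0, 0.0], [0.0, 2.0, 0.0]])
rotated = rotate_points(points, rot_z)  # [[0, 1, 0], [-2, 0, 0]]
print(distances_to(rotated, np.zeros(3)))  # [1. 2.]
```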

Another reason we discourage using PyTorch is to add a barrier against deep learning creeping into Monty. Although we don't have a fundamental issue with contributors using deep learning, we worry that it will be the first thing someone's mind goes to when solving a problem (when you have a hammer...). We want contributors to think intentionally about whether deep learning is the best solution for what they want to solve. Monty relies on very different principles than those most ML practitioners are used to, so it is useful to think outside the mental framework of deep learning. More importantly, evidence that the brain can perform the long-range weight transport required by deep learning's cornerstone algorithm, back-propagation, is extremely scarce. We are developing a system that, like the mammalian brain, should be able to use local learning signals to rapidly update representations, while also remaining robust under conditions of continual learning. As a general rule, therefore, please avoid PyTorch and the algorithm it is usually leveraged to support: back-propagation!

You can read more about our views on deep learning in Monty in our FAQ.

All source code files must have a copyright and license header. The header must be placed at the top of the file, on the first line, before any other code. For example, in Python:

```python
# Copyright <YEARS> Thousand Brains Project
#
# Copyright may exist in Contributors' modifications
# and/or contributions to the work.
#
# Use of this source code is governed by the MIT
# license that can be found in the LICENSE file or at
# https://opensource.org/licenses/MIT.
```

The `<YEARS>` is the year of the file's creation, optionally followed by a sequence or range of years if the file has been modified over time. For example, if a file was created in 2024 and not modified again, the first line of the header should be `# Copyright 2024 Thousand Brains Project`. If the file has been modified in consecutive years between 2022 and 2024, the header should be `# Copyright 2022-2024 Thousand Brains Project`. If the file was modified in non-consecutive years, for example in 2022 and then in 2024 and 2025, the header should be `# Copyright 2022,2024-2025 Thousand Brains Project`.

In other words, if you are creating a new file, add the copyright and license header with the current year. If you are modifying an existing file and the header does not include the current year, then add the current year to the header. You should never need to modify anything aside from the year in the very first line of the header.
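The year rule above can be written as a small function. This is a hypothetical helper for illustration, not a tool that ships with Monty; it assumes you pass every year in which the file was created or modified.

```python
def format_copyright_years(years: list[int]) -> str:
    """Collapse years into the header format: consecutive runs become ranges.

    Hypothetical helper; expects at least one year.
    """
    sorted_years = sorted(set(years))
    groups: list[str] = []
    start = prev = sorted_years[0]
    for year in sorted_years[1:]:
        if year == prev + 1:
            prev = year  # still inside a run of consecutive years
            continue
        # The run ended: emit "start" or "start-prev", then begin a new run.
        groups.append(str(start) if start == prev else f"{start}-{prev}")
        start = prev = year
    groups.append(str(start) if start == prev else f"{start}-{prev}")
    return ",".join(groups)


def header_first_line(years: list[int]) -> str:
    """Build the first line of the copyright header for the given years."""
    return f"# Copyright {format_copyright_years(years)} Thousand Brains Project"


print(format_copyright_years([2024]))  # 2024
print(format_copyright_years([2022, 2023, 2024]))  # 2022-2024
print(format_copyright_years([2022, 2024, 2025]))  # 2022,2024-2025
```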

Note

While we deeply value and appreciate every contribution, the source code file header is reserved for essential copyright and license information and will not be used for contributor acknowledgments.

The key words "MUST", "MUST NOT", "REQUIRED", "SHALL", "SHALL NOT", "SHOULD", "SHOULD NOT", "RECOMMENDED", "MAY", and "OPTIONAL" in this document are to be interpreted as described in BCP 14, RFC 2119, RFC 8174 when, and only when, they appear in all capitals, as shown here.

This guidance does not dictate the only way to implement functionality. There are many ways to implement any particular functionality, each of which will work. This guidance establishes constraints so that, as more functionality is implemented and existing functionality is changed, the code remains as easy to change as it was when the first piece of functionality was written.

Please note that there are differences between research and platform requirements. While research needs speed and agility, the platform needs modularity and stability. These are different and can conflict. The guidance here is for the platform. If you are a researcher, you MAY ignore this guidance in your prototype and code in the way most effective for you and your task. Later, if your prototype works and needs to be integrated into Monty, then we will refactor the prototype to correspond to the guidance here.

Why? We want to move to Protocols eventually. Keeping abstract classes free of implementation makes it easier to transition to Protocols in the future. By contrast, an abstract class with some implementation requires additional refactoring when we transition to Protocols.

Why do we want to move to Protocols eventually? We want to catch errors as early as possible, and using Protocols allows us to do this at type check time. Using abstract classes delays this until class instantiation, once the program runs.

```python
from abc import ABC, abstractmethod
from typing import Protocol

# ABCs raise errors during instantiation when/if the constructor is called:
class Monty(ABC):
    def implemented(self):
        pass

    @abstractmethod
    def unimplemented(self):
        pass

class DefaultMonty(Monty):
    pass

def invoke(monty: Monty):
    monty.unimplemented()

monty = DefaultMonty()  # runtime error & fails type check
invoke(monty)  # OK, no type error

# ---

# Typical inheritance raises errors during runtime when monty.unimplemented() is called:
class Monty:
    def implemented(self):
        pass

    def unimplemented(self):
        raise NotImplementedError

class DefaultMonty(Monty):
    pass

def invoke(monty: Monty):
    monty.unimplemented()  # runtime error

monty = DefaultMonty()  # OK, no type error
invoke(monty)  # OK, no type error

# ---

# Protocols raise errors during type check when attempting use:
class MontyProtocol(Protocol):
    def implemented(self): ...

    def unimplemented(self): ...

class DefaultMonty:
    def implemented(self):
        pass

def invoke(monty: MontyProtocol):
    monty.unimplemented()  # runtime error

monty = DefaultMonty()  # OK
other: MontyProtocol = DefaultMonty()  # fails type check
invoke(monty)  # fails type check
```

While abstract classes MAY be used, you SHOULD prefer Protocols.

Protocols document a behaves-like-a relationship.

Why: We want to catch errors as early as possible, and using Protocols allows us to do this at type check time. Using abstract classes delays this until class instantiation, once the program runs.

There is no material difference in the context of usage and expectation documentation between using Protocols and abstract classes. In other contexts, Protocols are favorable because they allow us to raise errors at type check time and, due to structural typing, do not require inheritance.
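For contrast with the failing example, a class that provides every method of the protocol satisfies it structurally, without inheriting from it. This sketch adds a hypothetical `FullMonty` class and decorates the protocol with `typing.runtime_checkable` only so the match can also be demonstrated at runtime; `runtime_checkable` checks that the methods exist, not their signatures, so the type checker remains the primary safeguard.

```python
from typing import Protocol, runtime_checkable


@runtime_checkable
class MontyProtocol(Protocol):
    def implemented(self): ...

    def unimplemented(self): ...


class FullMonty:
    """Does not inherit from MontyProtocol, but provides both methods."""

    def implemented(self) -> str:
        return "implemented"

    def unimplemented(self) -> str:
        return "now implemented"


def invoke(monty: MontyProtocol):
    return monty.unimplemented()


monty: MontyProtocol = FullMonty()  # passes type check: structural match
print(invoke(monty))  # now implemented
print(isinstance(FullMonty(), MontyProtocol))  # True (method presence only)
```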

```python
from typing import Protocol

# Protocols raise errors during type check when attempting use:
class MontyProtocol(Protocol):
    def implemented(self): ...

    def unimplemented(self): ...

class DefaultMonty:
    def implemented(self):
        pass

def invoke(monty: MontyProtocol):
    monty.unimplemented()  # runtime error

monty = DefaultMonty()  # OK
other: MontyProtocol = DefaultMonty()  # fails type check
invoke(monty)  # fails type check
```

Why: An inheritance hierarchy allows methods to be overridden. As class hierarchies deepen, override analysis becomes more complex. The issue is not how the code functions but the difficulty of reasoning about behavior when multiple layers of overrides are possible. The deeper the hierarchy, the more difficult it is to track which code a specific instance uses, and the less clear it is where functionality should be overridden. Modifying code with a deep inheritance hierarchy is also complex, in that any change can have cascading effects up and down the hierarchy.

Most of the time, you should default to not using inheritance hierarchy, and instead, reach for other ways to assemble functionality. Inheritance is appropriate for an is-a relationship, but this is quite a rare occurrence in practice. A lot of things seem like they form an is-a relationship, but the odds of that relationship being maintained drop off dramatically as the code evolves and the hierarchy deepens.

```python
class Rectangle:
    def __init__(self, length: float, height: float) -> None:
        super().__init__()  # See "You SHOULD always include call to super().__init__() ..." section below
        self._length = length
        self._height = height

    @property
    def area(self) -> float:
        return self._length * self._height

class Square(Rectangle):
    def __init__(self, side: float) -> None:
        super().__init__(side, side)

# So far so good...

# The next day, we want to add resize functionality
class Rectangle:
    # --unchanged code omitted--

    def resize(self, new_length: float, new_height: float) -> None:
        self._length = new_length
        self._height = new_height

# But, now this no longer makes sense for the Square
sq = Square(5)
sq.resize(5, 3)  # ?!
```

As depicted in the example above, if we assume an is-a relationship as the default and reach for inheritance, we can very rapidly introduce functionality that violates the is-a relationship requirement.

Using composition by default instead:

```python
# We want to reuse the area calculating functionality, hence this class
class DefaultAreaComputer:
    @staticmethod
    def area(length: float, height: float) -> float:
        return length * height

class Rectangle:
    def __init__(self, length: float, height: float) -> None:
        super().__init__()
        self._length = length
        self._height = height
        self._area_computer = DefaultAreaComputer

    @property
    def area(self) -> float:
        return self._area_computer.area(self._length, self._height)

class Square:
    def __init__(self, side: float) -> None:
        super().__init__()
        self._side = side
        self._area_computer = DefaultAreaComputer

    @property
    def area(self) -> float:
        return self._area_computer.area(self._side, self._side)

# Now, we want to implement resize for Rectangle
class Rectangle:
    # --unchanged code omitted--

    def resize(self, new_length: float, new_height: float) -> None:
        self._length = new_length
        self._height = new_height

# No issues, because we never assumed an is-a relationship in the first place.
```

What if we now want to replace the `DefaultAreaComputer` with a different implementation?

```python
# Implement a different computer
class TooComplicatedAreaComputer:
    @staticmethod
    def area(length: float, height: float) -> float:
        return 4 * (length / 2) * (height / 2)

# Use new computer in Rectangle
class Rectangle:
    def __init__(self, length: float, height: float) -> None:
        super().__init__()
        self._length = length
        self._height = height
        self._area_computer = TooComplicatedAreaComputer

    # --unchanged code omitted--
```

What if we want to make the area computer configurable?

```python
from typing import Protocol

# Define the protocol
class AreaComputer(Protocol):
    @staticmethod
    def area(length: float, height: float) -> float: ...

# Update Rectangle to accept area computer
class Rectangle:
    def __init__(
        self,
        length: float,
        height: float,
        area_computer: type[AreaComputer] = TooComplicatedAreaComputer,
    ) -> None:
        super().__init__()
        self._length = length
        self._height = height
        self._area_computer = area_computer

    # --unchanged code omitted--
```

If we want our code to change rapidly, to try out different ideas, and to configure existing code with these variants, using modular components for functionality reuse instead of inheritance allows changes to remain small in scope without affecting unrelated functionality up and down the inheritance chain.

Bare Functions or Static Methods SHOULD Be Used to Share a Functionality Implementation That Does Not Access Instance State

Why: Do not require state that you don’t access. Functions without state are vastly easier to reuse, refactor, reason about, and test.

```python
# calculating an area
class DefaultAreaComputer:
    @staticmethod
    def area(length: float, height: float) -> float:
        return length * height

# alternatively
def area(length: float, height: float) -> float:
    return length * height
```

One reason to prefer a static method on a class over a bare function is when we want to pass the functionality to another class. We want our configurations to be serializable, and a type is more straightforward to serialize than a Callable. For example:

```python
from collections.abc import Callable

# Static method approach
class Rectangle:
    def __init__(
        self,
        length: float,
        height: float,
        area_computer: type[AreaComputer] = DefaultAreaComputer,  # Easier to serialize
    ) -> None:
        super().__init__()
        self._length = length
        self._height = height
        self._area_computer = area_computer

    @property
    def area(self) -> float:
        return self._area_computer.area(self._length, self._height)

# Bare function approach
class Rectangle:
    def __init__(
        self,
        length: float,
        height: float,
        area_computer: Callable[[float, float], float] = area,  # More challenging to serialize
    ) -> None:
        super().__init__()
        self._length = length
        self._height = height
        self._area_computer = area_computer

    @property
    def area(self) -> float:
        return self._area_computer(self._length, self._height)
```

To Share a Functionality Implementation That Reads the State of the Instance Being Mixed With, Mixins MAY Be Used

For sharing functionality, mixins only implement shared behaves-like-a functionality. They add functionality; however, mixins SHALL NOT add state to the instance they are mixed into. Whenever you find yourself needing state while working on a mixin, switch to composition instead.

Why: When Mixins do not add state, they are not terrible for implementing shared functionality. That’s why you MAY use them for this. However, when Mixins add state, you must look at two places for the state to understand the implementation instead of one. Having to look in two places is an example of incidental complexity, where it is not inherent to the problem being solved. Incidental complexity should be minimized.

```python
# OK, Mixin only reads state
class RectangleAreaMixin:
    @property
    def area(self) -> float:
        return self._length * self._height

class Rectangle(RectangleAreaMixin):
    def __init__(self, length: float, height: float) -> None:
        super().__init__()
        self._length = length
        self._height = height

# ---

# Not OK, Mixin adds state
class RectangleAreaMixin:
    def __init__(self, length: float, height: float) -> None:
        super().__init__()
        self._length = length
        self._height = height

    @property
    def area(self) -> float:
        return self._length * self._height

class Rectangle(RectangleAreaMixin):
    def __init__(self, length: float, height: float) -> None:
        super().__init__(length, height)
```

Composition is used to implement a has-a relationship.

Why: Components encapsulate additional state in a single concept in a single place in the code.

Given a Rectangle that uses a DefaultAreaComputer (because we reuse that functionality elsewhere), let’s say we want to count how many times we resized it.

```python
import math

class DefaultAreaComputer:
    @staticmethod
    def area(length: float, height: float) -> float:
        return length * height

class Rectangle:
    def __init__(self, length: float, height: float) -> None:
        super().__init__()
        self._length = length
        self._height = height
        self._area_computer = DefaultAreaComputer
        self._resize_count = 0  # We track count in Rectangle state

    @property
    def area(self) -> float:
        return self._area_computer.area(self._length, self._height)

    def resize(self, new_length: float, new_height: float) -> None:
        self._length = new_length
        self._height = new_height
        self._resize_count += 1  # We update internal state

    @property
    def resize_count(self) -> int:
        return self._resize_count

# Now, we want to reuse the count functionality
# First, we extract/encapsulate the DefaultCounter functionality
class DefaultCounter:
    def __init__(self) -> None:
        self._count = 0

    def increment(self) -> None:
        self._count += 1

    @property
    def count(self) -> int:
        return self._count

# We then update Rectangle to use the shared functionality
class Rectangle:
    def __init__(self, length: float, height: float) -> None:
        super().__init__()
        self._length = length
        self._height = height
        self._area_computer = DefaultAreaComputer
        # Note that the count itself (state) is no longer in the Rectangle
        self._resize_counter = DefaultCounter()  # We track count in DefaultCounter

    # --unchanged code omitted--

    def resize(self, new_length: float, new_height: float) -> None:
        self._length = new_length
        self._height = new_height
        self._resize_counter.increment()  # We update the count

    @property
    def resize_count(self) -> int:
        return self._resize_counter.count

# And now that we extracted the DefaultCounter functionality, we can use it elsewhere
class Circle:
    def __init__(self, radius: float) -> None:
        super().__init__()
        self._radius = radius
        # Note that DefaultCounter() introduces new state, but it is
        # encapsulated within the component
        self._resize_counter = DefaultCounter()

    @property
    def area(self) -> float:
        # We don't need to make everything a component.
        # Since we don't reuse circle area functionality anywhere,
        # it is OK to have it inline here.
        return math.pi * self._radius ** 2

    def resize(self, new_radius: float) -> None:
        self._radius = new_radius
        self._resize_counter.increment()

    @property
    def resize_count(self) -> int:
        return self._resize_counter.count
```

Why: This avoids possible issues with multiple inheritance by opting into “cooperative multiple inheritance.”

This should not be an issue once all of our code follows this guidance document, specifically ensuring that Mixins do not introduce state, which makes Mixins with `__init__` unlikely. However, it may be a while before we get there, so this guidance is included.
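Which `__init__` each `super().__init__()` call reaches is determined by the class's method resolution order (MRO), which Python exposes as the `__mro__` attribute. The following sketch uses the same `Parent`/`Mixin` layout as the examples, with two hypothetical `Child` variants, to print that order.

```python
class Parent:
    def __init__(self) -> None:
        super().__init__()


class Mixin:
    def __init__(self) -> None:
        super().__init__()


class ChildMixinFirst(Mixin, Parent):
    pass


class ChildParentFirst(Parent, Mixin):
    pass


# Each super().__init__() call moves to the *next* class in the MRO,
# not necessarily to a base class of the class where the call is written.
print([cls.__name__ for cls in ChildMixinFirst.__mro__])
# ['ChildMixinFirst', 'Mixin', 'Parent', 'object']
print([cls.__name__ for cls in ChildParentFirst.__mro__])
# ['ChildParentFirst', 'Parent', 'Mixin', 'object']
```

Because every class in the chain calls `super().__init__()`, the chain reaches `object` no matter which order the bases are listed in.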

See https://eugeneyan.com/writing/uncommon-python/#using-super-in-base-classes for additional details, but here are some examples with their corresponding output:

Note

The `print` call occurs after the call to `super().__init__()`. The output would be in a different order if `print` occurred before the `super().__init__()` call.

```python
# Correct and expected
class Parent:
    def __init__(self) -> None:
        super().__init__()
        print("Parent init")

class Mixin:
    pass

class Child(Mixin, Parent):
    def __init__(self) -> None:
        super().__init__()
        print("Child init")

child = Child()
# Output
# > Parent init
# > Child init

# Also correct and expected
class Parent:
    def __init__(self) -> None:
        super().__init__()
        print("Parent init")

class Mixin:
    pass

class Child(Parent, Mixin):
    def __init__(self) -> None:
        super().__init__()
        print("Child init")

child = Child()
# Output
# > Parent init
# > Child init
```

The problems begin when inherited classes all have `__init__` defined.

```python
# Correct and expected
class Parent:
    def __init__(self) -> None:
        super().__init__()
        print("Parent init")

class Mixin:
    def __init__(self) -> None:
        super().__init__()
        print("Mixin init")

class Child(Mixin, Parent):
    def __init__(self) -> None:
        super().__init__()
        print("Child init")

child = Child()
# Output
# > Parent init
# > Mixin init
# > Child init

# Also correct and expected
class Parent:
    def __init__(self) -> None:
        super().__init__()
        print("Parent init")

class Mixin:
    def __init__(self) -> None:
        super().__init__()
        print("Mixin init")

class Child(Parent, Mixin):
    def __init__(self) -> None:
        super().__init__()
        print("Child init")

child = Child()
# Output
# > Mixin init
# > Parent init
# > Child init

# If you skip the super().__init__() call in one of the inherited classes, some class __init__ methods are skipped

# class Child(Mixin, Parent) where we skip super().__init__() in Mixin
class Parent:
    def __init__(self) -> None:
        super().__init__()
        print("Parent init")

class Mixin:
    def __init__(self) -> None:
        # super().__init__() skipped
        print("Mixin init")

class Child(Mixin, Parent):
    def __init__(self) -> None:
        super().__init__()
        print("Child init")

child = Child()
# Output
# > Mixin init
# > Child init

# class Child(Parent, Mixin) where we skip super().__init__() in Parent
class Parent:
    def __init__(self) -> None:
        # super().__init__() skipped
        print("Parent init")

class Mixin:
    def __init__(self) -> None:
        super().__init__()
        print("Mixin init")

class Child(Parent, Mixin):
    def __init__(self) -> None:
        super().__init__()
        print("Child init")

child = Child()
# Output
# > Parent init
# > Child init
```

Python introduced type hints in version 3.5, as defined in PEP 484. The Python interpreter itself generally ignores type hints, so they are mainly used through IDE/LSP support and type checking tools like mypy. The system was designed to be optional: any code that ran before the introduction of type hints continues to run after it. It is also gradual, in that the entire codebase doesn't have to be fully type hinted before some degree of benefit can be realized.

In the future, we would like to utilize type hints in the Monty codebase. However, there are multiple approaches that can be taken to do this, so we want to provide some guidance to ensure that the type hints we add give us the most benefit.

Python already has a dynamic type system. Variables don't have types that restrict the values that can be assigned to them. Instead, the values themselves have types that determine what can be done with them, and any checks of those types occur at runtime, raising errors when they fail.
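A short illustration of this dynamic behavior: the variable accepts values of any type, and the type check only happens when the operation actually runs.

```python
value = 42           # the variable itself has no type...
value = "forty-two"  # ...so it can be rebound to a str

try:
    value + 1  # str does not support + with an int; checked only at runtime
except TypeError as err:
    error_message = str(err)
    print(f"Runtime error: {error_message}")
```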

Using type hints with a type checker implements a static type system. A static type system assigns types to variables, restricting which values can be assigned to them and which operations can be performed on them, and it checks those types ahead of time, without running the code.

The main benefit of a static type checker enforcing constraints is that it can prevent errors by checking ahead of time for method calls or operations on a variable that would otherwise throw an error at runtime. Type hints also document what arguments are allowed in methods and what types they return. They can also encode logic into the type system to allow for proving certain properties of the code.

One thing to be aware of: the Python interpreter DOES NOT care about type hints. Anything you could normally do in Python without type hints is still possible at runtime with them. They only add value when a type checker is used to confirm that the operations being performed match what the type hints allow.
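A small sketch showing that a hint is not enforced at runtime. The function double is hypothetical. A type checker such as mypy would report the call with a str argument as an error, but the interpreter runs it because str happens to support * with an int.

```python
def double(x: int) -> int:
    return x * 2


# Rejected by a type checker, but the interpreter ignores the hint.
result = double("ab")
print(result)  # abab

# The hints are stored on the function object; nothing enforces them.
print(double.__annotations__)  # {'x': <class 'int'>, 'return': <class 'int'>}
```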

This especially applies to newtypes (see below for details). Newtypes do nothing at runtime. There’s a slight performance cost for calling the newtype’s “constructor”, but it’s negligible. Like the rest of type hinting, a newtype is just a hint: the Python interpreter ignores it, but a type checker can use it to help ensure correctness.
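A quick way to see this, using a hypothetical UserId newtype: calling the newtype returns its argument unchanged, so at runtime the value is just the underlying type.

```python
from typing import NewType

# Hypothetical newtype, used only for this illustration
UserId = NewType("UserId", int)

user = UserId(5)

# The "constructor" hands back the original int; no wrapper object exists.
print(type(user))  # <class 'int'>
print(user == 5)   # True
```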

The key words "MUST", "MUST NOT", "REQUIRED", "SHALL", "SHALL NOT", "SHOULD", "SHOULD NOT", "RECOMMENDED", "MAY", and "OPTIONAL" in this document are to be interpreted as described in BCP 14, RFC 2119, and RFC 8174 when, and only when, they appear in all capitals, as shown here.

The type assigned to arguments should be as abstract as possible when specifying the arguments to a method or function. For example, if a method needs some collection of items, use Iterable rather than List as the argument type.

```python
from typing import Iterable, List


# Don't restrict the argument to a List
def double_list(l: List[int]) -> List[int]:
    return [x * 2 for x in l]


# Instead, use an appropriate collection type
def double_coll(c: Iterable[int]) -> List[int]:
    return [x * 2 for x in c]
```

Using the broadest type that provides the needed functionality allows for more flexibility when calling the function or method. A type checker accepts a set (or any other iterable of integers) for the second version, while the first only accepts lists.
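As a usage sketch, here is the second function from the example above, repeated so the snippet runs on its own. A type checker accepts each of these calls, whereas with double_list it would reject every argument except the list.

```python
from typing import Iterable, List


def double_coll(c: Iterable[int]) -> List[int]:
    return [x * 2 for x in c]


print(double_coll([1, 2, 3]))             # [2, 4, 6]
print(double_coll((1, 2, 3)))             # [2, 4, 6]
print(double_coll(range(1, 4)))           # [2, 4, 6]
print(double_coll(x for x in (1, 2, 3)))  # [2, 4, 6]
print(sorted(double_coll({3, 1, 2})))     # [2, 4, 6] (sets are unordered)
```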

The type returned from a method or function should be the most concrete type possible. In the previous example, the return type could have been declared as Collection[int], but that type wouldn't allow calling list-specific methods on the returned value, even though the function returns a list.

Returning the most concrete type gives more flexibility to the caller to use that value in ways that the function/method author might not have considered.
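A sketch of the difference, with two hypothetical functions that return the same list at runtime but declare different return types. Only the concrete annotation lets the caller use list methods without a type checker error.

```python
from typing import Collection, List


def evens_as_collection(n: int) -> Collection[int]:
    return [i for i in range(n) if i % 2 == 0]


def evens_as_list(n: int) -> List[int]:
    return [i for i in range(n) if i % 2 == 0]


evens = evens_as_list(6)
evens.append(6)  # fine for the type checker: List has append
print(evens)  # [0, 2, 4, 6]

# A type checker would flag this call: "Collection[int]" has no attribute "append",
# even though the runtime value is a list.
# evens_as_collection(6).append(6)
```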

Structural typing is a type system in which the structure of the type is what matters for type checking. Two values with the same structure will type-check as the same type, regardless of any type aliases being used (see the Python glossary for a stricter definition of structural types).

Basic types like str, list, and dict[int, str] are examples of structural types in Python. Nothing about a dict[int, str] indicates what those keys or values represent, and any dictionary that takes integers as keys and has strings as values would type-check against that type.

Type aliases are structural types; they do not lead to nominal types. From the type checker's perspective, they are replaced with the type that they alias. They are only a convenience for not having to write out long structural types.

While Protocols provide structural subtyping, they are an exception to this guidance and SHOULD be used for static duck typing (see the section on duck typing for details).

Where possible, structural types SHOULD NOT be used, because they don't define the concepts that the types represent, which reduces their usefulness.

```python
import math
from typing import Tuple

# Type alias for a quaternion
# This doesn't define a new type, it just allows the function
# definition below to be shorter.
QuaternionWXYZ = Tuple[float, float, float, float]

# Define another one for a different order
QuaternionXYZW = Tuple[float, float, float, float]


def normalize_quaternion(quat: QuaternionWXYZ) -> QuaternionWXYZ:
    # Based on the type alias, the function expects (w, x, y, z)
    w, x, y, z = quat
    magnitude = math.sqrt(w * w + x * x + y * y + z * z)
    return (w / magnitude, x / magnitude, y / magnitude, z / magnitude)


quat: QuaternionXYZW = (0.0, 0.0, 0.0, 1.0)
# This type checks, but gives invalid results: the XYZW components are
# read (and returned) as if they were in WXYZ order.
norm = normalize_quaternion(quat)
```

Alternative ways to model a quaternion are newtypes or dataclasses, both of which are nominal types (see the section on nominal typing for details on the benefits of nominal types). Which to choose depends on whether additional functionality is needed, or on compatibility with third-party libraries.

```python
from dataclasses import dataclass
from typing import NewType, Tuple


@dataclass
class Quaternion:
    w: float
    x: float
    y: float
    z: float


# Or
QuaternionWXYZ = NewType("QuaternionWXYZ", Tuple[float, float, float, float])
```

The dataclass approach forces the author to name the coefficient they want to access, which removes ambiguity. The newtype still requires the author to be careful about which float in the tuple is the one they want, although the name helps indicate the order. The newtype also prevents passing a raw tuple where a QuaternionWXYZ is expected without explicitly converting it first.
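A short usage sketch of the two alternatives, with the definitions repeated so the snippet runs on its own. The dataclass coefficients are accessed by name, while the newtype requires an explicit wrap, which is the point where the author asserts that the tuple really is in WXYZ order.

```python
from dataclasses import dataclass
from typing import NewType, Tuple


@dataclass
class Quaternion:
    w: float
    x: float
    y: float
    z: float


QuaternionWXYZ = NewType("QuaternionWXYZ", Tuple[float, float, float, float])

# Dataclass: each coefficient is named, so the order can't be confused.
identity = Quaternion(w=1.0, x=0.0, y=0.0, z=0.0)
print(identity.w)  # 1.0

# Newtype: a raw tuple must be wrapped explicitly before a type checker
# accepts it where a QuaternionWXYZ is expected.
raw = (1.0, 0.0, 0.0, 0.0)
identity_wxyz = QuaternionWXYZ(raw)
w, x, y, z = identity_wxyz
print(w)  # 1.0
```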

An exception to this guideline would be the types of internal fields of a class, like the floats in the Quaternion dataclass in the previous example. Oftentimes those don’t need to be anything more specific than the underlying structural type. An example might be a name field on a class, which could simply be a str. Unless there is some extra metadata, e.g. the str is untrusted user input that should be handled carefully, a simple str will suffice. This can also apply to things like lists, sets, and dictionaries.

A rule of thumb in most cases would be to ask whether the type needs to be exposed outside of the class or function in which the variable is defined. Function or method arguments and return types should generally avoid structural types in favor of nominal types.

Nominal typing is a type system in which the name of the type is what matters for type checking. Two values with the same structure but different names are distinct types in this system (see the Python glossary for a stricter definition of nominal type).

More complex types, like dataclasses and regular classes, are examples of nominal types in Python. Two dataclasses with the same fields but different names type-check as different types. For classes and dataclasses (which are a convenience for defining certain kinds of classes), the class itself determines the type, not the fields or methods it has. Different classes are different types, and instances of one class can only type-check as another if one class is a subclass of the other (this is the subtype polymorphism behaviour provided by object-oriented languages).
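A sketch with hypothetical Point, Vector, and Point3D dataclasses. Point and Vector have identical fields, but they are distinct types; only a subclass of Point is accepted where a Point is expected.

```python
from dataclasses import dataclass


@dataclass
class Point:
    x: float
    y: float


@dataclass
class Vector:
    x: float
    y: float


@dataclass
class Point3D(Point):
    z: float = 0.0


def distance_from_origin(p: Point) -> float:
    return (p.x ** 2 + p.y ** 2) ** 0.5


print(distance_from_origin(Point(3.0, 4.0)))    # 5.0
print(distance_from_origin(Point3D(3.0, 4.0)))  # 5.0: a subclass is accepted

# A type checker would reject this: "Vector" is not "Point",
# even though the fields are identical.
# distance_from_origin(Vector(3.0, 4.0))

print(isinstance(Vector(3.0, 4.0), Point))  # False
```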

Python also provides NewType, which can be used to define nominal types based on basic types that would normally be structurally typed. An example of a newtype would be to define the concepts of radians and degrees for angles in a system, and to ensure that they can't be used in the wrong places.

```python
import math
from typing import NewType

Radians = NewType("Radians", float)
Degrees = NewType("Degrees", float)


# Both Radians and Degrees are floats, but they cannot be swapped.
def rads_to_degrees(rads: Radians) -> Degrees:
    return Degrees(math.degrees(rads))


right_angle = Radians(math.pi / 2)
another_angle = Degrees(180.0)

rads_to_degrees(right_angle)  # works fine

# FAILS to typecheck: "Degrees" is not "Radians", etc.
rads_to_degrees(another_angle)

# FAILS to typecheck: "float" is not "Radians", etc.
rads_to_degrees(270.0)
```

Another example would be to codify the order of a quaternion (since libraries disagree on whether to use WXYZ or XYZW) and then check usages (see the quaternion example in the section on structural typing).
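A minimal sketch of codifying quaternion order, assuming two hypothetical newtypes (QuaternionWXYZ and QuaternionXYZW) and hypothetical helpers xyzw_to_wxyz and scalar_part. A type checker would reject passing one order where the other is expected, so the conversion has to be called explicitly.

```python
from typing import NewType, Tuple

QuaternionWXYZ = NewType("QuaternionWXYZ", Tuple[float, float, float, float])
QuaternionXYZW = NewType("QuaternionXYZW", Tuple[float, float, float, float])


def xyzw_to_wxyz(quat: QuaternionXYZW) -> QuaternionWXYZ:
    # Move the scalar part from the last position to the first.
    x, y, z, w = quat
    return QuaternionWXYZ((w, x, y, z))


def scalar_part(quat: QuaternionWXYZ) -> float:
    return quat[0]


from_other_library = QuaternionXYZW((0.0, 0.0, 0.0, 1.0))

# FAILS to typecheck: "QuaternionXYZW" is not "QuaternionWXYZ"
# scalar_part(from_other_library)

print(scalar_part(xyzw_to_wxyz(from_other_library)))  # 1.0
```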

Newtypes should be used when no functionality beyond the underlying type is needed, but metadata about the type needs to be tracked. An example of this would be unsafe and safe strings in a web application. Confusing the two can lead to security vulnerabilities, and newtypes help the author keep track of which one is being used.

```python
import html
from typing import NewType

UnsafeString = NewType("UnsafeString", str)
SafeString = NewType("SafeString", str)


def render_with_content(template: SafeString, content: SafeString) -> str:
    # This isn't the best way to do this, but it's an example
    return template.format(content)


def sanitize_string(s: UnsafeString) -> SafeString:
    # Do some sanitization (html.escape is used here for illustration)
    new_s = html.escape(s)
    return SafeString(new_s)


unsafe_input = UnsafeString("<b>Monty</b>")  # comes from some user input
template = SafeString("Hello, {}")

# FAILS to typecheck: "UnsafeString" is not "SafeString"
render_with_content(template, unsafe_input)

safe_input = sanitize_string(unsafe_input)

# FAILS to typecheck: "SafeString" is not "UnsafeString"
safe_input = sanitize_string(safe_input)
```

In this example, it becomes more difficult to accidentally pass an unsafe string to a rendering function that could cause security issues, and it’s also difficult to accidentally sanitize a safe string a second time.

Duck typing is a form of structural typing in dynamically typed languages, in which values of two different types can be substituted for each other if they have the same methods. The name comes from the duck test: “If it walks like a duck, swims like a duck, and quacks like a duck, then it probably is a duck.”
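A minimal sketch of duck typing with hypothetical Duck and Robot classes that share no base class but both define quack(). The second half shows one way the same idea can be expressed for a type checker with a Protocol (static duck typing). runtime_checkable is added here only so that isinstance can be used in the illustration; it checks that the method exists, not its signature.

```python
from typing import Protocol, runtime_checkable


class Duck:
    def quack(self) -> str:
        return "Quack!"


class Robot:
    def quack(self) -> str:
        return "Beep quack."


def make_it_quack(thing) -> str:
    # Dynamic duck typing: no declared type, quack() is simply called.
    return thing.quack()


@runtime_checkable
class Quacker(Protocol):
    def quack(self) -> str: ...


def make_it_quack_typed(thing: Quacker) -> str:
    # Static duck typing: any class with a matching quack() type-checks.
    return thing.quack()


print(make_it_quack(Duck()))         # Quack!
print(make_it_quack_typed(Robot()))  # Beep quack.
print(isinstance(Robot(), Quacker))  # True
```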